Understanding Differential Privacy in Trust Graphs

In the growing landscape of digital privacy, how we share and protect our personal information matters more than ever. Recent work in differential privacy (DP) offers a promising new framework for this problem, called Trust Graph Differential Privacy (TGDP). The model accounts for the different levels of trust users place in their relationships, which allows a more nuanced approach to privacy than a single all-or-nothing trust assumption.

What is Differential Privacy?

Differential privacy is a mathematical definition of privacy for algorithms that analyze data about many people. A randomized algorithm is differentially private if its output distribution changes only slightly when any single individual's data changes, so the output reveals little about any one person while still supporting accurate aggregate statistics. Traditionally, DP has been studied in two models: the central model, where a trusted curator collects users' raw data and adds noise to the results it releases, and the local model, where each user's device randomizes its own data before the data ever leaves the device. The local model is appealing because it requires no trusted party, but the noise added by every user accumulates, so it typically yields much less accurate results than the central model.
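To make the accuracy gap between the two models concrete, the sketch below estimates how many users hold some yes/no attribute. It swaps in two standard textbook mechanisms for illustration: the Laplace mechanism for the central model and randomized response for the local model, each satisfying ε-DP for a privacy parameter `epsilon` (smaller means more private). The function names and the example data are hypothetical; this is a minimal sketch, not a production DP library.

```python
import math
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two i.i.d. exponentials with mean `scale`
    # is Laplace-distributed with location 0 and scale `scale`.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def keep_probability(epsilon: float) -> float:
    # Randomized response keeps the true bit with probability e^eps / (1 + e^eps).
    return math.exp(epsilon) / (1.0 + math.exp(epsilon))


def central_count(bits, epsilon, rng):
    # Central model: a trusted curator sees every raw bit, computes the exact
    # count, and adds Laplace noise once. A count changes by at most 1 when one
    # user's bit changes (sensitivity 1), so the noise scale is 1 / epsilon.
    return sum(bits) + laplace_noise(1.0 / epsilon, rng)


def randomized_response(bit, epsilon, rng):
    # Local model: each user perturbs their own bit before sending it.
    p = keep_probability(epsilon)
    return bit if rng.random() < p else 1 - bit


def local_count(bits, epsilon, rng):
    # The untrusted aggregator sees only noisy reports. Since
    # E[report] = (2p - 1) * bit + (1 - p), it debiases the sum of reports.
    p = keep_probability(epsilon)
    reports = [randomized_response(b, epsilon, rng) for b in bits]
    return (sum(reports) - len(bits) * (1 - p)) / (2 * p - 1)


def central_variance(epsilon):
    # Variance of Laplace noise with scale 1 / epsilon.
    return 2.0 / epsilon ** 2


def local_variance(n, epsilon):
    # Each debiased report has variance p(1 - p) / (2p - 1)^2,
    # and the independent errors of n users add up.
    p = keep_probability(epsilon)
    return n * p * (1 - p) / (2 * p - 1) ** 2


if __name__ == "__main__":
    rng = random.Random(0)
    bits = [1] * 300 + [0] * 700  # hypothetical data: 300 of 1,000 users hold the attribute
    print(central_count(bits, 1.0, rng))  # typically within a few units of 300
    print(local_count(bits, 1.0, rng))    # unbiased, but a much noisier estimate
    print(central_variance(1.0))          # 2.0
    print(local_variance(1000, 1.0))      # roughly 920.7
```

With ε = 1 and 1,000 users, the central estimate has error variance 2 while the local one has variance of roughly 920, which is the "reduced accuracy" of the local model in numbers.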

The Role of Trust in Data Sharing

In practice, how people share data depends on how much they trust each other. For instance, Alice might willingly share her location with her close friend Bob, but not with an acquaintance or a stranger. Privacy needs are therefore varied and relationship-specific, which exposes a limitation of traditional DP: its two models rest on all-or-nothing trust assumptions, with the central model trusting the curator completely and the local model trusting no one.

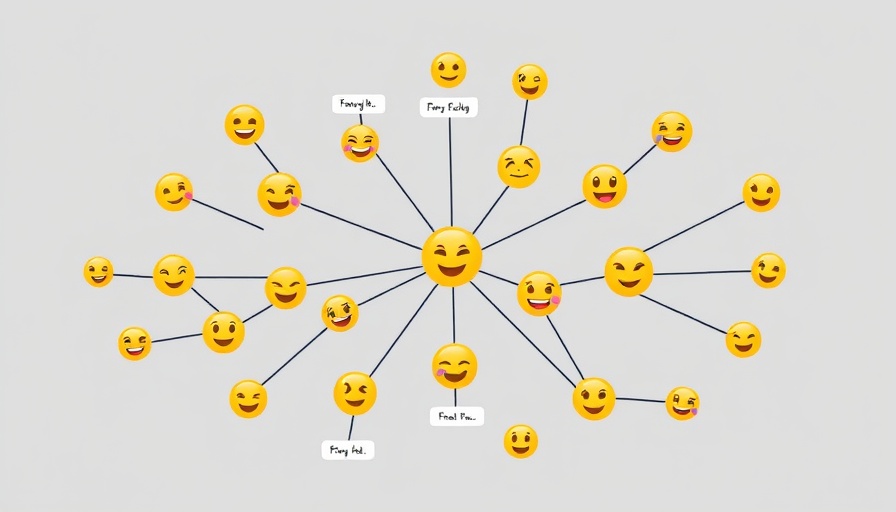

Introducing Trust Graphs

The trust graph model represents relationships among users as a graph. Each vertex is a user, and an edge between two users means they trust each other. For example, an edge between Alice and Bob means Alice is willing to share her raw data with Bob, and Bob with her. TGDP lets data flow freely along these trusted edges while still guaranteeing privacy against every user who is not directly trusted.
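A trust graph can be stored as an ordinary undirected adjacency structure. The sketch below is a minimal illustration assuming mutual (undirected) trust; the edges (Alice and Bob, Bob and Carol, Bob and Dave, with Eve trusting no one) and the helper names `build_trust_graph`, `trusted_by`, and `untrusted_by` are hypothetical and not taken from the original figure.

```python
def build_trust_graph(users, edges):
    # Undirected graph: an edge (u, v) means u and v trust each other.
    graph = {u: set() for u in users}
    for u, v in edges:
        graph[u].add(v)
        graph[v].add(u)
    return graph


def trusted_by(graph, user):
    # Users who may see `user`'s raw data: its direct neighbors only.
    return set(graph[user])


def untrusted_by(graph, user):
    # Everyone else except the user themself. Under TGDP, the combined view
    # of these users must be differentially private with respect to `user`'s data.
    return set(graph) - graph[user] - {user}


# Hypothetical example graph.
users = ["Alice", "Bob", "Carol", "Dave", "Eve"]
edges = [("Alice", "Bob"), ("Bob", "Carol"), ("Bob", "Dave")]
g = build_trust_graph(users, edges)

print(sorted(trusted_by(g, "Alice")))    # ['Bob']
print(sorted(untrusted_by(g, "Alice")))  # ['Carol', 'Dave', 'Eve']
```

Note that trust is not transitive in this model: Bob trusts Carol, but Carol still counts as untrusted from Alice's point of view.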

How Trust Graph Differential Privacy Works

At the heart of Trust Graph Differential Privacy is this requirement: for each user, the messages seen by all the users that person does not trust, taken together, must be differentially private with respect to that user's data. In other words, the combined view of the untrusted users should look almost the same even if that one user's data changes. In a trust graph example, Alice may share her exact location with Bob, whom she trusts. Whatever Bob then sends to Carol and Dave must not give Alice's location away: even if Carol, Dave, and Eve, none of whom Alice trusts, pool everything they have received, they cannot reliably infer her location. TGDP thereby bridges the central and local DP models: if no users trust each other, it reduces to the local model, and if everyone trusts a single party, that party can play the role of a central curator.
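One simple way to satisfy this requirement for a private sum is sketched below. It is an illustrative protocol under stated assumptions, not necessarily the one proposed in the TGDP research: choose a set of "aggregators" such that every user either is one or trusts one (a dominating set, found here with a simple greedy heuristic), send each user's raw bit only to a trusted aggregator, and have each aggregator publish its partial sum plus Laplace noise. All function names and the example graph are hypothetical.

```python
import random


def closed_neighborhoods(users, edges):
    # N[v] = v plus everyone v trusts (trust is mutual in this sketch).
    nbhd = {u: {u} for u in users}
    for u, v in edges:
        nbhd[u].add(v)
        nbhd[v].add(u)
    return nbhd


def greedy_aggregators(nbhd):
    # Greedily pick aggregators until every user is, or trusts, one of them.
    # This yields a dominating set, though not necessarily the smallest one.
    uncovered = set(nbhd)
    chosen = []
    while uncovered:
        # Ties are broken alphabetically because max() keeps the first maximum.
        best = max(sorted(nbhd), key=lambda v: len(nbhd[v] & uncovered))
        chosen.append(best)
        uncovered -= nbhd[best]
    return chosen


def tg_private_sum(values, users, edges, epsilon, rng):
    # values: each user's bit in {0, 1}, so one user changes a sum by at most 1.
    nbhd = closed_neighborhoods(users, edges)
    aggregators = greedy_aggregators(nbhd)
    partial = {a: 0 for a in aggregators}
    for u in users:
        # Raw data goes only to a trusted aggregator (possibly the user itself).
        a = next(a for a in aggregators if a in nbhd[u])
        partial[a] += values[u]
    # Each aggregator publishes a Laplace-noised partial sum. Every user feeds
    # exactly one partial sum, so the published sums are epsilon-DP with
    # respect to any single user's bit, as seen by that user's untrusted peers.
    scale = 1.0 / epsilon
    noisy = [s + rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
             for s in partial.values()]
    return sum(noisy), aggregators


def noise_variance(num_aggregators, epsilon):
    # Each aggregator contributes Laplace noise with variance 2 / epsilon^2.
    return num_aggregators * 2.0 / epsilon ** 2


if __name__ == "__main__":
    users = ["Alice", "Bob", "Carol", "Dave", "Eve"]
    edges = [("Alice", "Bob"), ("Bob", "Carol"), ("Bob", "Dave")]
    values = {"Alice": 1, "Bob": 0, "Carol": 1, "Dave": 1, "Eve": 0}
    estimate, aggs = tg_private_sum(values, users, edges, 1.0, random.Random(0))
    print(aggs)      # ['Bob', 'Eve']
    print(estimate)  # noisy estimate of the true sum, 3
```

The number of aggregators controls the error and shows the interpolation between the two classic models: with no edges, every user must be their own aggregator (one noisy term per user, as in the local model), while if everyone trusts a single hub, one noisy term suffices (as in the central model).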

Implications for AI and Data Sharing

TGDP's implications may extend to AI and data-sharing platforms, including those for AI education and business networking, where data flows between users with differing levels of trust. As AI evolves, modeling user trust and privacy accurately will be important for building robust systems that balance technical efficiency with ethical data use.

Embracing a New Privacy Paradigm

As these innovations in differential privacy mature, frameworks like TGDP will be worth understanding for AI professionals and businesses alike. Communities focused on AI innovations and updates can help individuals keep up with this new privacy landscape. Through proactive learning and adaptation, professionals can make better use of AI tools while keeping user privacy a top priority.

As technology continues to reshape the way we connect and share information, understanding and applying frameworks like Trust Graph Differential Privacy is essential for anyone involved in the AI sector. Stay informed and explore AI learning platforms, so you can be at the forefront of this rapidly evolving field.
