The Shift Towards Privacy in AI Development

The evolution of artificial intelligence (AI) is increasingly intertwined with the need for privacy. Recent advances in machine learning (ML) underscore how important data privacy has become amid a global push for responsible technology deployment. Google's researchers stress that training both large and small language models (LMs) requires high-quality data, and that this data must be gathered and used under strict privacy standards.

What’s Driving Privacy-Preserving Domain Adaptation?

As the use of AI tools surges, especially on smartphones, users demand not just functionality but also assurance that their data is handled with care. Google’s Gboard illustrates this well: the keyboard app relies on both compact language models and more capable large language models (LLMs) to serve its users. Combining these models can significantly improve the user experience, but doing so while respecting privacy is paramount.

A Look at Synthetic Data’s Role in AI

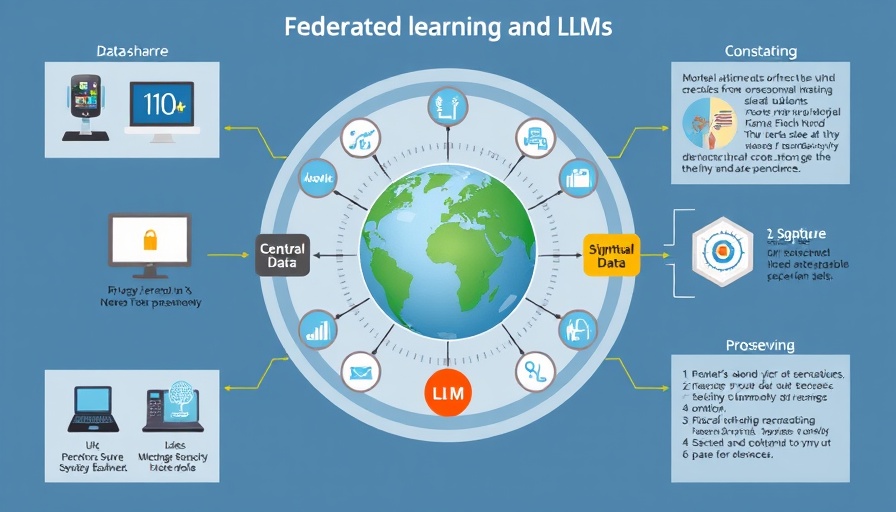

Synthetic data has emerged as a way to train LMs without compromising user privacy. In projects like Gboard, researchers combine synthetic data with federated learning (FL) so that models learn from user interactions without directly accessing users' personal data. Models can thus be refined using insights drawn from both public and private data, while the raw private data itself is never collected or retained centrally. The emphasis is on data minimization and anonymization, which protect privacy while the models continue to improve.

Federated Learning and Its Real-World Impacts

Federated learning (FL) with differential privacy (DP) has proven essential for training models in a privacy-preserving manner. Combined with techniques that let models adapt to the domain of user interactions, FL has delivered strong results, such as a reported 3% to 13% improvement in mobile typing performance. These models process information largely on the user's own device, which reduces the risk of exposing sensitive data and helps protect individual privacy.
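To make the FL-with-DP idea more concrete, here is a minimal sketch of one round of differentially private federated averaging in the general style of DP-FedAvg. It is not Gboard's implementation. It assumes a toy "model" (a plain vector) whose hypothetical local objective is to move toward the mean of each client's data. All names (`local_update`, `clip_update`, `dp_fedavg_round`, `clip_norm`, `noise_multiplier`) are illustrative and belong to no library. Each simulated device computes an update on its own data and sends back only that update. The server clips every update to bound any single user's influence and adds Gaussian noise to the sum before averaging. The sketch omits client sampling and privacy accounting, so on its own it does not establish a formal DP guarantee.

```python
import math
import random
from typing import List

Vector = List[float]


def clip_update(update: Vector, clip_norm: float) -> Vector:
    """Scale an update so its L2 norm is at most clip_norm (bounds one user's influence)."""
    norm = math.sqrt(sum(x * x for x in update))
    if norm <= clip_norm or norm == 0.0:
        return list(update)
    scale = clip_norm / norm
    return [x * scale for x in update]


def local_update(weights: Vector, local_data: List[Vector], lr: float) -> Vector:
    """Hypothetical on-device step: move the weights toward the mean of the local data.

    Only the resulting delta is returned; the raw local_data never leaves this function.
    """
    dim = len(weights)
    mean = [sum(row[i] for row in local_data) / len(local_data) for i in range(dim)]
    return [lr * (m - w) for m, w in zip(mean, weights)]


def dp_fedavg_round(
    weights: Vector,
    client_datasets: List[List[Vector]],
    clip_norm: float,
    noise_multiplier: float,
    lr: float,
    rng: random.Random,
) -> Vector:
    """One server round: clip each client's update, sum, add Gaussian noise, then average."""
    clipped = [clip_update(local_update(weights, data, lr), clip_norm) for data in client_datasets]
    n = len(clipped)
    summed = [sum(u[i] for u in clipped) for i in range(len(weights))]
    sigma = noise_multiplier * clip_norm  # noise scale is tied to the clipping bound
    noisy_mean = [(s + rng.gauss(0.0, sigma)) / n for s in summed]
    return [w + d for w, d in zip(weights, noisy_mean)]


# Usage: three simulated devices, each holding a few private 2-D data points.
rng = random.Random(0)
clients = [
    [[1.0, 2.0], [1.2, 1.8]],
    [[0.8, 2.2]],
    [[1.1, 2.1], [0.9, 1.9], [1.0, 2.0]],
]
w = [0.0, 0.0]
for _ in range(50):
    w = dp_fedavg_round(w, clients, clip_norm=1.0, noise_multiplier=0.1, lr=0.5, rng=rng)
print(w)  # close to the average of the client means, perturbed by the DP noise
```

Clipping and noise work together here. Because every client's contribution is capped at `clip_norm`, noise scaled to that same bound can mask whether any single user's data was present. Raising `noise_multiplier` strengthens privacy at the cost of a noisier model.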

Why AI’s Future Depends on Privacy

As machine learning and AI technologies become more prevalent, the emphasis on privacy will only intensify. For businesses, transparency and compliance with privacy regulations are no longer optional; they are vital to maintain consumer trust. Innovations in AI, like those at Google, aim to show that high functionality does not have to come at the expense of user privacy. Going forward, stakeholders must continue to support efforts that prioritize ethical AI development.

Act on What Matters: Embrace the Future of AI Responsibly

As the AI landscape evolves, understanding the balance between functionality and privacy is crucial for professionals and businesses. Staying informed about developments in AI, such as privacy-preserving technologies, will help stakeholders make better decisions about how they use these tools. Consider joining a community focused on AI education, or attending networking events that discuss the field's implications and innovations. Sharing knowledge in an AI-focused community can deepen understanding of these important trends.
